Many companies need to monitor their VMware infrastructure, and almost always the only option that comes up is the extremely extensive and costly Veeam Management Pack for System Center.

But is this really the only option, and do you need an MP this extensive? Some organisations have smaller environments where vCenter is the primary tool for monitoring and troubleshooting VMware.

That’s why I created my own VMware Management Pack. This MP puts a very light load on your environment, captures the alarms defined in your vCenter and translates them into SCOM alerts.

For those interested, feel free to leave me a note. I’ll be glad to share more details.

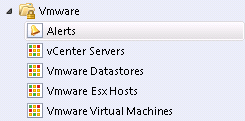

Some screenshots:

Folders created after import

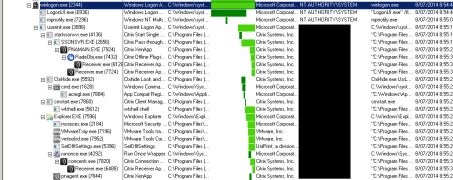

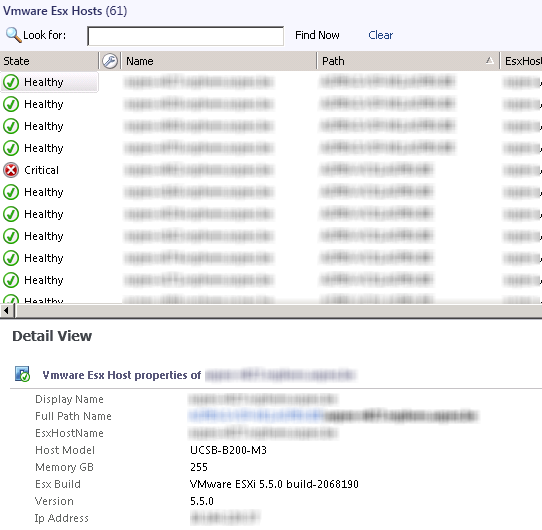

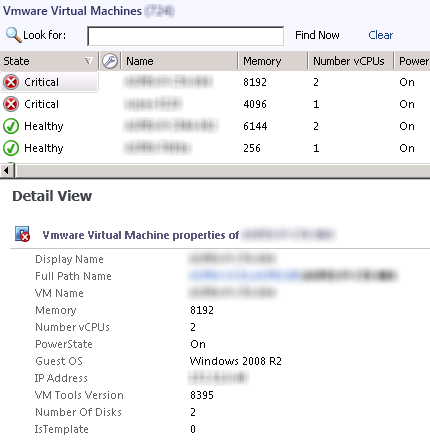

ESX Host, VM and Datastore Discoveries

All of these objects are discovered with a large number of properties.

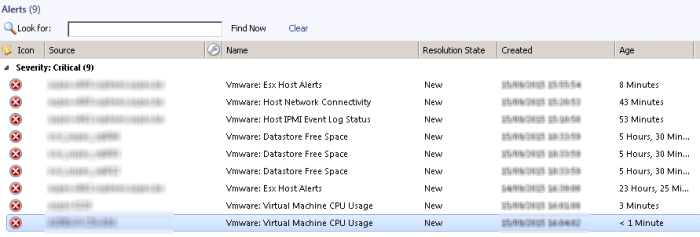

Alert View

As already mentioned, every alarm configured in your vCenter can be brought into your SCOM environment as an alert.
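The post doesn't describe how the MP maps vCenter alarm states to SCOM alert severities. As a rough illustration only, here is a minimal Python sketch, assuming a hypothetical mapping in which a red alarm becomes a Critical alert, a yellow alarm becomes a Warning, and green (healthy) or gray (unknown) states raise nothing. The names `VCenterAlarm`, `ScomAlert`, `SEVERITY_MAP` and `translate` are hypothetical and do not come from the management pack, the vSphere API or the SCOM SDK.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from vCenter alarm status colours to SCOM alert
# severities. This is an assumption for illustration, not the MP's actual logic.
SEVERITY_MAP = {
    "red": "Critical",
    "yellow": "Warning",
}


@dataclass
class VCenterAlarm:
    entity: str      # the object the alarm fired on, e.g. an ESX host, VM or datastore
    alarm_name: str  # name of the alarm definition in vCenter
    status: str      # "gray", "green", "yellow" or "red"


@dataclass
class ScomAlert:
    name: str
    severity: str
    monitoring_object: str


def translate(alarm: VCenterAlarm) -> Optional[ScomAlert]:
    """Turn a triggered vCenter alarm into a SCOM-style alert, or None if no alert is needed."""
    severity = SEVERITY_MAP.get(alarm.status.lower())
    if severity is None:
        # Green and gray states (and anything unrecognised) raise no alert in this sketch.
        return None
    return ScomAlert(name=alarm.alarm_name, severity=severity, monitoring_object=alarm.entity)


if __name__ == "__main__":
    alarms = [
        VCenterAlarm("esx01", "Host CPU usage", "red"),
        VCenterAlarm("datastore01", "Datastore usage on disk", "yellow"),
        VCenterAlarm("vm-web01", "Virtual machine memory usage", "green"),
    ]
    for a in alarms:
        print(a.alarm_name, "->", translate(a))
```

Keeping the mapping in a single dictionary makes it easy to decide which alarm states should surface in SCOM; a real management pack would also need to handle alarm resolution (closing the SCOM alert when the vCenter alarm turns green), which this sketch leaves out.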

For those interested, I’ve made the Management Pack available at the following link: https://1drv.ms/f/s!AvZD1kbn8-n_am2uLPmtw3JWrZk

Feel free to leave a comment.